Shared Task: Machine Translation Robustness

Following the first shared task on Machine Translation (MT) Robustness, we now propose a second edition, which aims at testing MT systems' robustness towards domain diversity. The language pairs are:

- German to/from English

- Japanese to/from English

This task shares the same training data as this year's news translation task, and we encourage participants of the news task to submit their systems.

GOALS

This year's task aims to evaluate a general MT system's performance in the following two scenarios:

- Zero-shot: evaluate a general MT system's performance in unseen domains at test time which is different from a training domain (e.g. news).

- Few-shot: if a few training examples are provided for a given target domain, can the general MT system leverage those training examples to improve performance on this domain while not dropping its performance on other domains?

| Release of training/dev data |

March 06, 2020 |

| Test data released |

June 15 July 10, 2020 |

| Zero-shot translation submission deadline |

June 21 July 20, 2020 (23:59 UTC-12) |

| Few-shot training data release |

June 22 July 21, 2020 |

| Few-shot translation submission deadline |

June 28 July 27, 2020 (23:59 UTC-12) |

| System description paper submission deadline |

July August 15, 2020 |

| End of evaluation |

July August 10, 2020 |

To facilitate comparability with the news task, and also to reduce the cost to participate, we share the same training data as the WMT20 news task. The focus of the robustness task is to explore novel training and modeling approaches so that a strong general MT system has robust performance at test time on multiple domains including unseen and diversified domains.

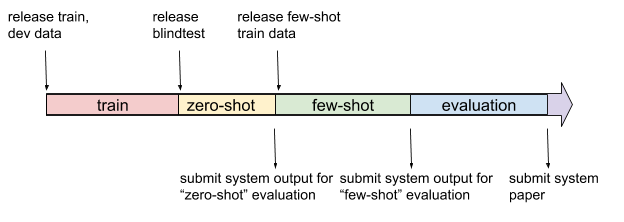

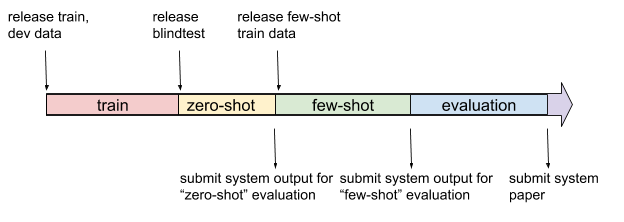

The test cycle is divided into two phases.

- In the first phase (zero-shot phase), we release blind-test from unseen domain(s), and participants submit their system's output.

- In the second phase (few-shot phase), we release a small amount of training data (~10k sentence pairs) from one of the test domains and participants submit their system's output after utilizing these training examples.

You may participate in either or both language pair.

TRAINING DATA

Constrained

You may only use the training data made available for this year's news translation task for training. You can use both the parallel data and monolingual data provided in this year's task. Multilingual system trained with data provided by WMT20 news task is also allowed (but please flag it).

We encourage participants of the news translation task to submit their systems, especially for the zero-shot phase.

Unconstrained

You may use additional monolingual data from domains such as biomedical and/or Reddit.

DEVELOPMENT DATA

You may use the following data to evaluate your system's performance on unseen and multiple domains.

NEW: TEST DATA

You can download the blind test sets.

The tar archive contains 3 different sets of files for the 4 language pairs, however not every set convers every language pair:

robustness20-set1-deen.de

robustness20-set1-ende.en

robustness20-set1-enja.en

robustness20-set1-jaen.ja

robustness20-set2-enja.en

robustness20-set2-jaen.ja

robustness20-set3-deen.de

NEW: FEW SHOT TRAINING DATA

For the second stage of the evaluation, you may use additional training data to improve your system.

Test sets with references for post-evaluation analysis.

Translation output should be submitted as real case, detokenized, and in text format.

For English-Japanese, your raw text output needs to be segmented with Kytea (version 0.4.7 recommended), first:

kytea -model /path/to/kytea/share/kytea/model.bin -out tok YOUR_OUTPUT > YOUR_OUTPUT_TOK

Please upload this file to the website following steps below:

- Go to the website ocelot.mteval.org.

- Create an account

- Go to "Create Submssion" to submit translations for a language pair.

- select as test set "robustness2020" and the language pair you are submitting

- select "create new system"

- click "continue"

- on the next page, upload your file and add some description. Don't forget to indicate whether you are submitting a constrained or unconstrained system.

If you are submitting contrastive runs, please submit your primary system first and mark it clearly as the primary submission.

For system description paper submission, please follow the instruction in PAPER SUBMISSION INFORMATION.

Evaluation will be done both automatically as well as by human judgement. Zero-shot and few-shot systems will be evaluated and compared separately.

- Manual Scoring: We will collect subjective judgments about translation quality from human annotators.

- We expect the translated submissions to be in recased, detokenized, text format, just as in most other translation campaigns (NIST, TC-Star).

- Xian Li, Facebook

- Yonatan Belinkov, Harvard and MIT

- Nadir K. Durrani, QCRI

- Philipp Koehn, JHU

- Hany Hassan, Microsoft

- Paul Michel, Carnegie Mellon University

- Graham Neubig, Carnegie Mellon University

- Juan Pino, Facebook

- Hassan Sajjad, QCRI

- Lucia Specia, Imperial College London (and Facebook)

- Zhenhao Li, Imperial College London

Questions or comments can be posted at wmt-tasks@googlegroups.com.